The paper shown in the header image was generated with ChatGPT.

🔴The author openly acknowledges this and also adds some commentary of his own.

🔴The paper contains no references.

🔴Should this type of paper be in the scientific literature?

Here is some further information and some thoughts we had.

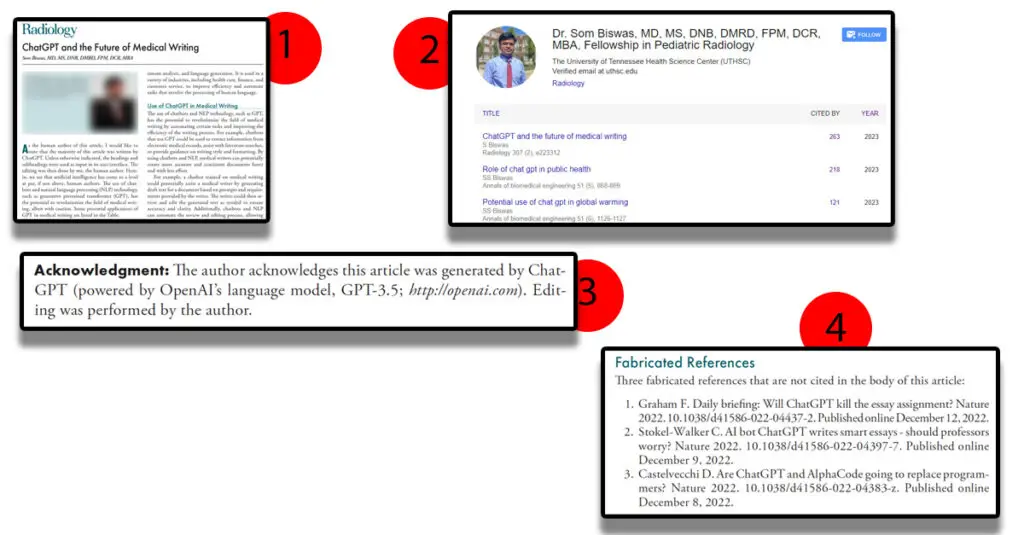

1️⃣The paper we are looking at (see 1 in the image) is “ChatGPT and the Future of Medical Writing” by Som Biswas. You can access the paper here: https://doi.org/10.1148/radiol.223312.

2️⃣We chose this paper after seeing a tweet by @MishaTeplitskiy. Unfortunately, his tweet has since been deleted, so we cannot link to it.

3️⃣Essentially, the tweet said that an author (Som Biswas) had published a large number of papers in 2023 that share a similar theme, namely “ChatGPT and the [insert domain here]”.

4️⃣You can see the Google Scholar profile of the author (see 2 on the image). This profile appears to have been deleted but we archived it on Wayback Machine: https://web.archive.org/web/20231216094207/https://scholar.google.com/citations?hl=en&user=37CkqwcAAAAJ

5️⃣If you look at the paper, the author acknowledges that he used ChatGPT to write the paper (see 3 on the image). Not only is this acknowledged in the acknowledgments section but it is also stated in the paper itself. This is a credit to the author.

6️⃣The paper cites zero references in its body. There is, however, a section called “Fabricated References” (see 4 on the image).

The text in the paper says “Three fabricated references that are not cited in the body of this article:” We are not quite sure what this means. Does it mean that ChatGPT generated these references and the author simply listed them, without using them in the body of the article?

Just to note, the references are valid papers.

7️⃣This paper has been cited 263 times (see 2 in the image). This seems a lot for a paper published in 2023 (and this figure was captured on 16 Dec 2023). We are not suggesting anything is wrong, but it does suggest that a deeper investigation might be worthwhile.

8️⃣This paper does acknowledge the use of ChatGPT in writing the paper but

i) do all his similar papers do this and

ii) if the author says he edited the ChatGPT-generated text, is there any way of knowing how much editing has been done?

So what?

So, why are we raising this? Here are a few things you might want to think about.

The point of the original tweet was to raise the fact that this is a prolific author, especially in 2023.

Many of the papers are around ChatGPT and how it relates to a given domain. They are all (we think – we need to do a full investigation) generated by ChatGPT.

Our question is “What is to stop anybody generating papers using ChatGPT, on any subject, and publishing them?”

Are we entering a time when anybody can publish in the scientific literature just by using a large language model?

This is a particular concern when there is no literature review/related work section and the paper contains no references.

Final Note: This article is based on one of our previous tweets.