Introduction

Generative AI (Artificial Intelligence) is increasingly used to help write papers, and there is also active research into ways of detecting papers that have been written using AI tools. This article highlights two recent papers in this area.

ChatGPT and Large Language Models (LLMs)

ChatGPT, and other LLM tools, are already having a massive impact on the world at large and the scientific community is, if anything, more affected than many other sectors.

Detecting papers written with AI tools

There is no doubt that AI tools can be of benefit to those writing scientific papers, but there is a growing need to detect when these tools have been used.

Why? There are many reasons, but one is to be able to check papers whose authors have not declared that they used generative AI to assist with the writing.

Many journals ask that the use of AI is declared. As with plagiarism checkers, it would be useful to have a tool that checks whether a paper has been written, even partly, using one of the many AI tools that are now available.

But detecting AI-generated text is not an easy task, which has given rise to research on this topic.

Two recent papers

These two papers are just a couple of recent examples of research addressing this topic.

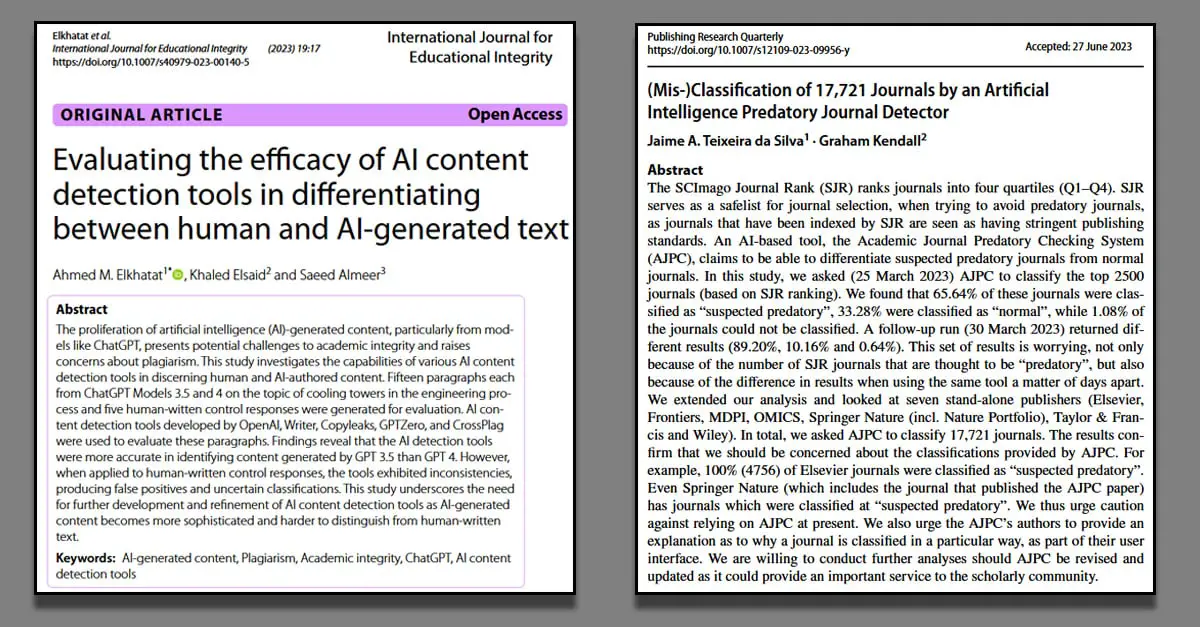

1) Evaluating the efficacy of AI content detection tools in differentiating between human and AI-generated text (DOI: https://doi.org/10.1007/s40979-023-00140-5)

Abstract: The proliferation of artificial intelligence (AI)-generated content, particularly from models like ChatGPT, presents potential challenges to academic integrity and raises concerns about plagiarism. This study investigates the capabilities of various AI content detection tools in discerning human and AI-authored content. Fifteen paragraphs each from ChatGPT Models 3.5 and 4 on the topic of cooling towers in the engineering process and five human-written control responses were generated for evaluation. AI content detection tools developed by OpenAI, Writer, Copyleaks, GPTZero, and CrossPlag were used to evaluate these paragraphs. Findings reveal that the AI detection tools were more accurate in identifying content generated by GPT 3.5 than GPT 4. However, when applied to human-written control responses, the tools exhibited inconsistencies, producing false positives and uncertain classifications. This study underscores the need for further development and refinement of AI content detection tools as AI-generated content becomes more sophisticated and harder to distinguish from human-written text.
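To make terms such as "false positives" and "uncertain classifications" concrete, here is a minimal Python sketch of how a detector's verdicts might be tallied. The verdicts below are invented purely for illustration and are not data from the paper; the function name `summarise` and the three verdict labels are also assumptions.

```python
from collections import Counter

# Hypothetical verdicts, NOT results from the paper.
# Each entry is (true_source, detector_verdict).
verdicts = [
    ("ai", "ai"), ("ai", "ai"), ("ai", "human"), ("ai", "uncertain"),
    ("human", "human"), ("human", "ai"), ("human", "uncertain"),
]


def summarise(verdicts):
    """Tally detection, false-positive and uncertain rates for one detector."""
    counts = Counter(verdicts)
    n_ai = sum(c for (src, _), c in counts.items() if src == "ai")
    n_human = sum(c for (src, _), c in counts.items() if src == "human")
    n_uncertain = sum(c for (_, v), c in counts.items() if v == "uncertain")
    return {
        # Share of AI-written samples that were flagged as AI.
        "detection_rate": counts[("ai", "ai")] / n_ai,
        # Share of human-written samples wrongly flagged as AI.
        "false_positive_rate": counts[("human", "ai")] / n_human,
        # Share of all samples where the tool gave no clear verdict.
        "uncertain_rate": n_uncertain / len(verdicts),
    }


summary = summarise(verdicts)
print(summary)
```

A false positive here means a human-written sample flagged as AI-generated, which is the case that matters most when a tool is used to check an author's work.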

2) (Mis‑)Classification of 17,721 Journals by an Artificial Intelligence Predatory Journal Detector (DOI: https://doi.org/10.1007/s12109-023-09956-y)

Abstract: The SCImago Journal Rank (SJR) ranks journals into four quartiles (Q1–Q4). SJR serves as a safelist for journal selection, when trying to avoid predatory journals, as journals that have been indexed by SJR are seen as having stringent publishing standards. An AI-based tool, the Academic Journal Predatory Checking System (AJPC), claims to be able to differentiate suspected predatory journals from normal journals. In this study, we asked (25 March 2023) AJPC to classify the top 2500 journals (based on SJR ranking). We found that 65.64% of these journals were classified as “suspected predatory”, 33.28% were classified as “normal”, while 1.08% of the journals could not be classified. A follow-up run (30 March 2023) returned different results (89.20%, 10.16% and 0.64%). This set of results is worrying, not only because of the number of SJR journals that are thought to be “predatory”, but also because of the difference in results when using the same tool a matter of days apart. We extended our analysis and looked at seven stand-alone publishers (Elsevier, Frontiers, MDPI, OMICS, Springer Nature (incl. Nature Portfolio), Taylor & Francis and Wiley). In total, we asked AJPC to classify 17,721 journals. The results confirm that we should be concerned about the classifications provided by AJPC. For example, 100% (4756) of Elsevier journals were classified as “suspected predatory”. Even Springer Nature (which includes the journal that published the AJPC paper) has journals which were classified as “suspected predatory”. We thus urge caution against relying on AJPC at present. We also urge the AJPC’s authors to provide an explanation as to why a journal is classified in a particular way, as part of their user interface. We are willing to conduct further analyses should AJPC be revised and updated as it could provide an important service to the scholarly community.
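To give a feel for the scale of the difference between the two runs, the percentages quoted in the abstract can be converted back into approximate journal counts. The short Python sketch below does this under the assumption that each percentage was computed over the full set of 2500 journals; the label "unclassified" is shorthand used here for journals that could not be classified, and the helper name `to_counts` is hypothetical.

```python
TOP_JOURNALS = 2500  # sample size stated in the abstract

# Percentages reported in the abstract for each run.
runs = {
    "25 March 2023": {"suspected predatory": 65.64, "normal": 33.28, "unclassified": 1.08},
    "30 March 2023": {"suspected predatory": 89.20, "normal": 10.16, "unclassified": 0.64},
}


def to_counts(percentages, total):
    """Convert percentages of `total` into whole-journal counts."""
    return {label: round(total * pct / 100) for label, pct in percentages.items()}


counts = {date: to_counts(pcts, TOP_JOURNALS) for date, pcts in runs.items()}

# How many more journals were flagged in the second run than in the first.
shift = (
    counts["30 March 2023"]["suspected predatory"]
    - counts["25 March 2023"]["suspected predatory"]
)

for date, by_label in counts.items():
    print(date, by_label)
print("Additional journals flagged in the second run:", shift)
```

On these assumptions, the first run corresponds to roughly 1641 journals flagged as "suspected predatory" and the second to roughly 2230, a difference of about 589 journals between runs made five days apart.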

Finally

If you are interested in this area of research, these two papers might be good starting points, especially if you also access the papers that they cite.