Introduction

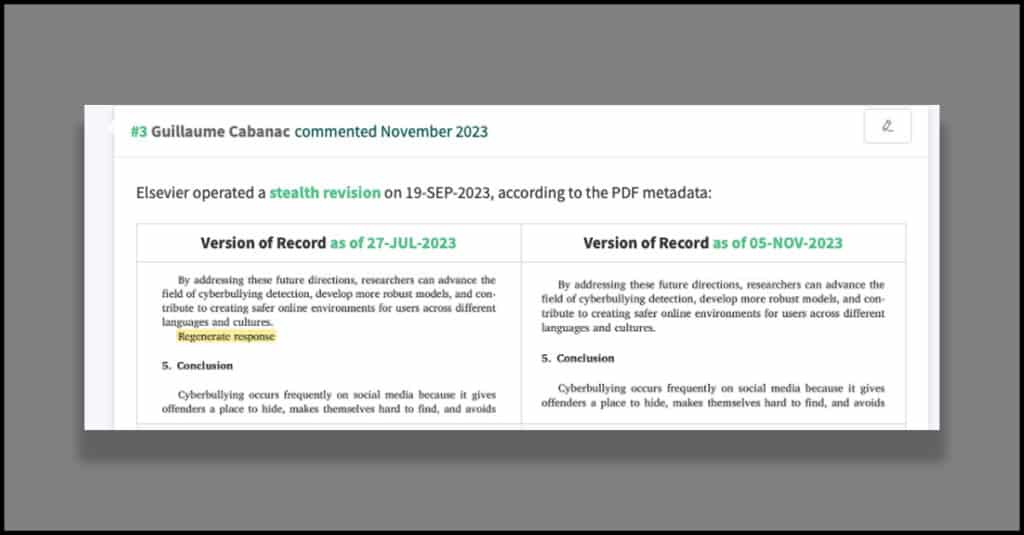

In a post on X (formerly Twitter), Guillaume Cabanac pointed out that Elsevier had published a paper and then altered the published version. Elsevier did not explain why the paper had been changed, and the update was not made in the way most people would expect.

Elsevier also overlooked the fact that the authors had used an AI tool when writing their paper but had not acknowledged doing so. This goes against the publisher's guidelines.

Further details are below.

"Regenerate Response"

The paper contained the phrase “Regenerate response”, which is the label of a button in AI chatbot interfaces (such as ChatGPT) that asks the tool to generate its answer again.

If the authors copied AI-generated text and failed to delete this leftover interface text, it is evidence that they used a generative AI tool. This is subject to context, as some papers will use the term legitimately, for example, when carrying out research into this aspect of generative AI.

In this case, the authors had used an AI tool (by their own admission), but did not acknowledge it in the submission to the journal.

The paper was published with the “regenerate response” phrase in it (see the header image of this article), but it was later changed to remove that phrase, with no explanation of why the paper had been updated.

PubPeer

The change in the paper was noticed and was raised on the PubPeer platform. You can see the PubPeer comment here.

Once this was brought to the attention of the journal, they simply updated the paper.

Updating a scientific paper

Once a peer-reviewed scientific article has been published, it should not be changed. The correct way to address errors is to issue an erratum in a future issue of the journal.

This was not done in this case. This means that different people could be looking at different versions of the paper, which should never happen with articles that are in the scientific archive.

It is an important principle that anybody with access to a peer-reviewed scientific paper is reading the same version of that paper as everybody else. This is why an erratum should be issued when an error is found.

Our comments/thoughts

- Why did Elsevier just change the paper, without any explanation? They should have done this via a corrigendum or an erratum, surely?

- The fact that the authors used an #LLM and did not acknowledge it goes against the publisher's guidelines. Should this be an automatic retraction?

- The publisher has removed the “regenerate response” phrase, but there is still no acknowledgement in the paper, from the authors, that an AI tool has been used. Therefore, they are still in breach of the publisher's guidelines.

- Perhaps the publisher was embarrassed that this had slipped through the peer review net and thought that the best way to deal with it was to simply update the paper; surely “nobody would notice”? Actually, they did.

- Perhaps the publisher thought that it was just a minor change and it was not a big issue to update the paper? The change may have been minor, but (in our view) you should still not change a paper that has been published.

- What sort of message does this send when one of our leading publishers appears to be complicit in unethical publishing? If legitimate publishers are acting unethically, what hope is there for the integrity of the scientific archive?

- In its author guidelines (archived here), Elsevier says:

“Authors should disclose in their manuscript the use of AI and AI-assisted technologies and a statement will appear in the published work. Declaring the use of these technologies supports transparency and trust between authors, readers, reviewers, editors, and contributors and facilitates compliance with the terms of use of the relevant tool or technology.”

- The guidelines also state the following (to see this text, you need to click one of the links at the bottom of the page, i.e. “In which section of the manuscript should authors disclose the use of AI-assisted technologies, and where will this statement appear in the chapter or work?”):

“We ask authors who have used AI or AI-assisted tools to insert a statement at the end of their manuscript, immediately above the references, entitled ‘Declaration of Generative AI and AI-assisted technologies in the writing process’. In that statement, we ask authors to specify the tool that was used and the reason for using the tool. We suggest that authors follow this format when preparing their statement:

During the preparation of this work the author(s) used [NAME TOOL / SERVICE] in order to [REASON]. After using this tool/service, the author(s) reviewed and edited the content as needed and take(s) full responsibility for the content of the publication.”

- As Guillaume Cabanac (@gcabanac) points out in his PubPeer comments, the authors did not acknowledge that they used an #LLM, and still don’t.

- The fact that Elsevier acknowledged that an #LLM was used means that it also accepts that the authors went against the guidelines it specifies. Surely, simply changing the article in response raises a lot of concerns.

===

You can see the original tweet from Guillaume Cabanac here. Our tweet, in response and on which this article is based, can be seen here.