Our tweet

In one of our tweets we said that only acknowledging the use of ChatGPT and, by extension, the use of Large Language Models is not enough.

The issue

Most journals, if not all, now require authors to declare the use of Large Language Models such as ChatGPT, Bard etc. This is a good start, but does it go far enough? We have come to the conclusion that it does not.

Let us take an extreme example. Actually, perhaps it is not that extreme – perhaps this is the norm. An author generates an entire paper using ChatGPT, then they simply acknowledge the use of this large language model.

Now consider the case where an author generates one paragraph using ChatGPT – say 5% of the paper, and then they heavily edit that paragraph. They do the right thing and acknowledge the assistance of ChatGPT.

These two scenarios are obviously very different and yet the acknowledgement could read exactly the same, something bland such as “ChatGPT 3.5 was used to assist in the writing of this paper.”

With such a bland acknowledgement, the reader will not know how much of the paper was written with ChatGPT assistance, or which parts of the paper received help.

We would suggest that something stronger is required. As an opening gambit, we present our suggestion here.

The solution

We suggest that something, above and beyond a simple acknowledgement, is required.

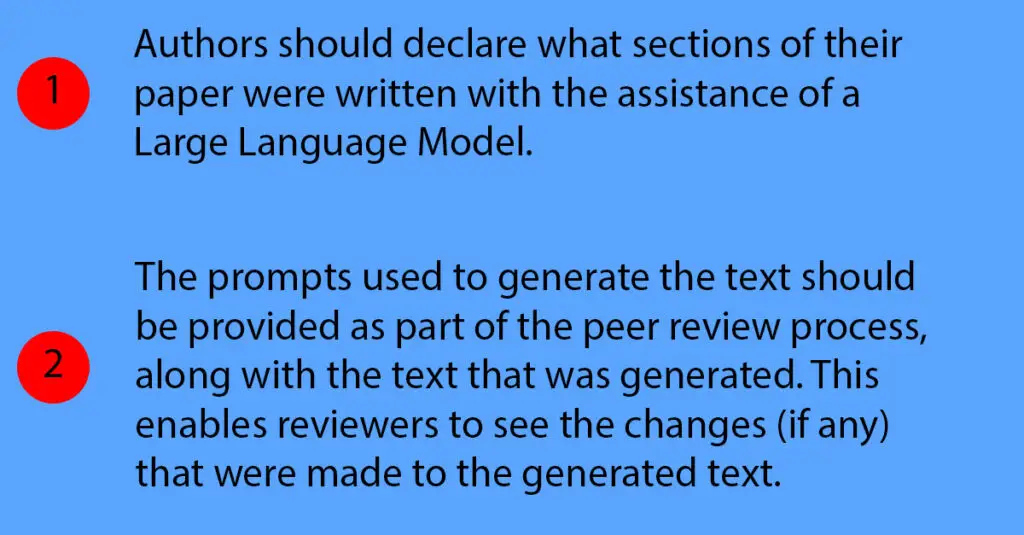

The main thrust of the suggestion is shown in the header image but can be stated as:

🔴An acknowledgement is still required.

🔴In addition, the author(s) are required to supply the ChatGPT prompts that were used, as well as the text that those prompts generated. This enables the reviewers to know which parts of the article had help from a large language model and also to see how much the generated text was edited.

🔴We also suggest that the prompts and generated text are uploaded as supplementary files. These can be made available to the reviewers and also the readers, should the paper be accepted.

These files might also be useful to future researchers who are studying the use of large language models, how this area has developed etc. That is, they might help researchers who are not interested in the topic the author(s) have written about, but who work in other research disciplines.
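To make the idea of a supplementary file a little more concrete, here is a minimal sketch of what one might contain and how a reviewer could get a rough sense of how heavily the generated text was edited. Everything in it is an assumption made for illustration: the `LLMDisclosure` record, its field names, the JSON layout and the word-level similarity measure are hypothetical and are not part of any journal's requirements.

```python
import json
from dataclasses import dataclass, asdict
from difflib import SequenceMatcher


@dataclass
class LLMDisclosure:
    """One declared prompt/response pair (hypothetical schema, for illustration)."""
    section: str          # where in the paper the text was used
    model: str            # e.g. "ChatGPT 3.5"
    prompt: str           # the prompt the author supplied
    generated_text: str   # what the model returned
    final_text: str       # what appears in the submitted paper

    def retained_ratio(self) -> float:
        """Word-level similarity between generated and final text (0.0 to 1.0).

        This is only a rough indicator of editing, not a measure of authorship.
        """
        matcher = SequenceMatcher(
            None, self.generated_text.split(), self.final_text.split()
        )
        return matcher.ratio()


def to_supplementary_json(records):
    """Serialise the records, adding the similarity score to each one."""
    payload = [
        dict(asdict(r), retained_ratio=round(r.retained_ratio(), 2))
        for r in records
    ]
    return json.dumps(payload, indent=2)


# Made-up example record; the text is invented purely for this sketch.
record = LLMDisclosure(
    section="Introduction, paragraph 2",
    model="ChatGPT 3.5",
    prompt="Write one sentence on the use of language models in academic writing.",
    generated_text="Large language models are widely used in academic writing",
    final_text="Large language models are increasingly used in scholarly writing",
)

print(to_supplementary_json([record]))
```

A score close to 1.0 would suggest the generated text was used almost as is, while a low score would suggest heavy editing. A single number like this cannot replace reading the prompts and text themselves, which is why we suggest supplying both.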

Your view?

We would welcome your views on this suggestion, either via our Twitter (X) account or via email at admin@predatory-publishing.com.